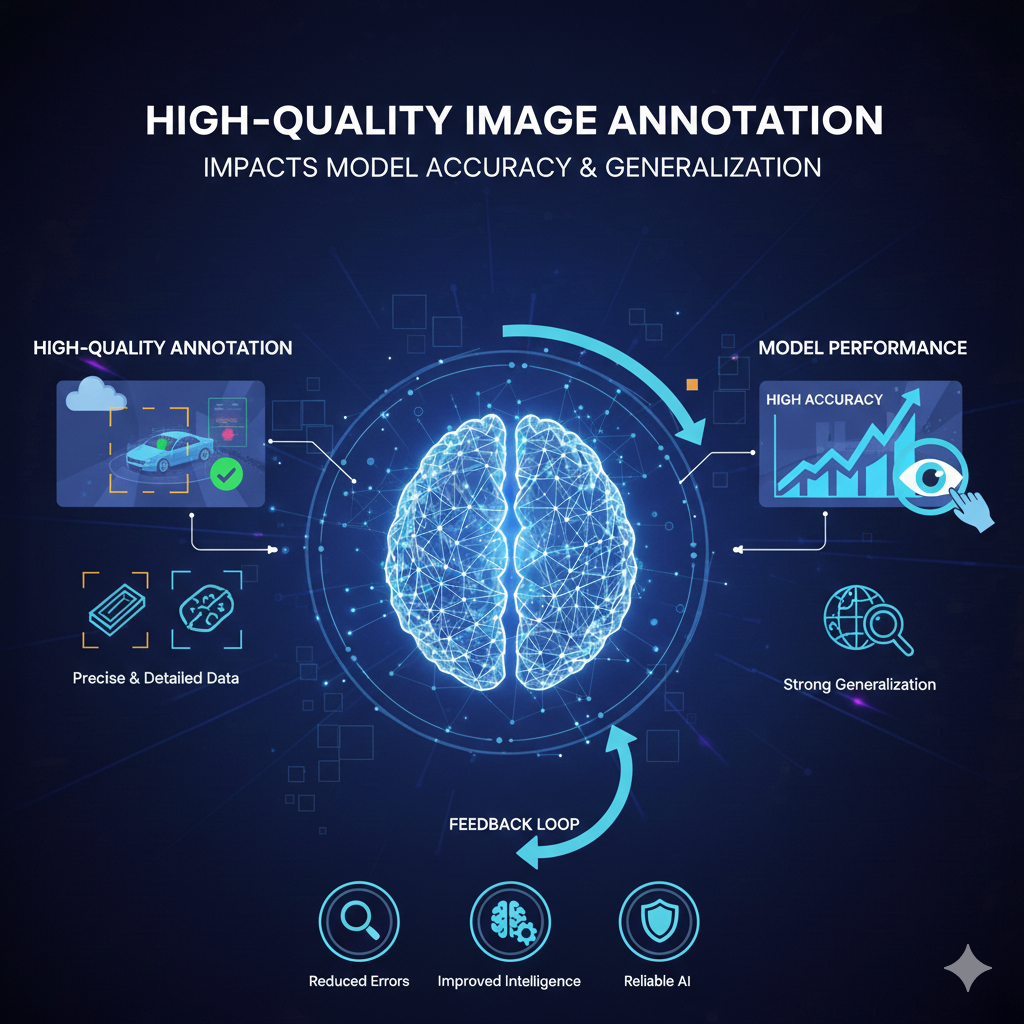

As artificial intelligence systems increasingly rely on computer vision, the quality of image annotation has emerged as a decisive factor in determining model performance. While sophisticated architectures and large-scale training pipelines attract much attention, the foundational role of labeled data is often underestimated. In practice, even the most advanced algorithms cannot compensate for inaccurate, inconsistent, or biased annotations. For organizations building vision-driven AI products, partnering with a reliable data annotation company and adopting rigorous annotation standards directly influences both model accuracy and long-term generalization.

This article explores how high-quality image annotation affects model outcomes, why annotation errors propagate into production risks, and how professional image annotation outsourcing helps organizations scale without sacrificing data integrity.

Image Annotation as the Ground Truth of Computer Vision

In supervised learning, annotated images serve as ground truth. Bounding boxes, polygons, keypoints, and segmentation masks teach a model how to perceive objects, relationships, and spatial context. The model does not “see” the world as humans do; it learns statistical patterns strictly from labeled examples.

If annotations are ambiguous or incorrect, the model internalizes those inaccuracies as truth. Over time, this degrades predictive performance, introduces bias, and increases error rates during real-world deployment. High-quality image annotation, by contrast, provides a clean training signal, enabling models to converge faster and learn more robust representations.

Direct Impact on Model Accuracy

Model accuracy is the most visible outcome of annotation quality. Precise labeling ensures that features extracted during training correspond to the intended object classes and boundaries.

For example, in object detection tasks, poorly aligned bounding boxes inject noise into the localization (box-regression) loss. The model then learns imprecise or inconsistent box coordinates, even when its classification accuracy looks acceptable during validation. Similarly, in semantic segmentation, pixel-level inaccuracies distort class boundaries, lowering intersection-over-union (IoU) scores and reducing the usefulness of the predicted masks downstream.
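To make the IoU effect concrete, here is a minimal Python sketch that computes IoU for two axis-aligned boxes. It assumes boxes are given as (x_min, y_min, x_max, y_max) in pixels; annotation tools use different conventions. The function name `box_iou` is illustrative and not taken from any particular library.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the intersection rectangle (may be empty)
    ix_min = max(a[0], b[0])
    iy_min = max(a[1], b[1])
    ix_max = min(a[2], b[2])
    iy_max = min(a[3], b[3])
    # Clamp negative widths/heights to zero when the boxes do not overlap
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# A reference box versus annotations shifted sideways by 10 px and 20 px
reference = (0, 0, 100, 100)
print(round(box_iou(reference, (10, 0, 110, 100)), 3))  # 0.818
print(round(box_iou(reference, (20, 0, 120, 100)), 3))  # 0.667
```

A 20-pixel misalignment on a 100-by-100 object already pulls IoU down to about 0.67, not far above the 0.5 threshold commonly used to count a detection as correct.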

A professional image annotation company enforces annotation guidelines, inter-annotator agreement checks, and multi-stage quality audits. These controls reduce label variance and improve training stability, which typically translates into higher accuracy with fewer training iterations and lower compute costs.
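Inter-annotator agreement on class labels is often summarized with Cohen's kappa, which corrects raw agreement for the agreement two annotators would reach by chance. Below is a minimal sketch for two annotators who each assign one class label per item. The function name and example labels are hypothetical. Agreement on boxes or masks would instead compare geometry, for example with IoU.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa between two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items where both chose the same class
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected chance agreement from each annotator's class frequencies
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        # Both annotators used one identical class throughout; kappa is
        # undefined here, so this sketch treats it as perfect agreement
        return 1.0
    return (p_o - p_e) / (1 - p_e)


annotator_a = ["car", "car", "truck", "car", "truck", "car"]
annotator_b = ["car", "truck", "truck", "car", "truck", "car"]
print(round(cohens_kappa(annotator_a, annotator_b), 3))  # 0.667
```

The two annotators agree on 5 of 6 items (about 83%), but chance agreement is 0.5, so kappa is only about 0.67. This gap is why agreement checks usually report a chance-corrected statistic rather than raw percent agreement.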

Annotation Quality and Model Generalization

While accuracy measures performance on known data, generalization determines how well a model performs on unseen, real-world inputs. This is where annotation quality becomes even more critical.

Generalization depends on whether annotated datasets adequately represent edge cases, environmental variability, and real-world noise. Inconsistent labeling across similar images can cause models to overfit superficial patterns rather than learn invariant features.
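One form of inconsistency that is cheap to detect is the same image receiving different labels. The sketch below assumes single-label classification records and catches only byte-identical duplicates, using a SHA-256 hash of the file contents. Near-duplicates such as resized or re-encoded copies would need a perceptual hash, which is not shown. The function name is hypothetical.

```python
import hashlib
from collections import defaultdict


def conflicting_duplicates(records):
    """Find byte-identical images that received different class labels.

    records: iterable of (image_bytes, label) pairs.
    Returns {sha256_hex: sorted distinct labels} for each conflicting group.
    """
    labels_by_hash = defaultdict(set)
    for image_bytes, label in records:
        digest = hashlib.sha256(image_bytes).hexdigest()
        labels_by_hash[digest].add(label)
    # Keep only images whose copies were labeled inconsistently
    return {h: sorted(ls) for h, ls in labels_by_hash.items() if len(ls) > 1}


found = conflicting_duplicates(
    [(b"img1", "cat"), (b"img1", "dog"), (b"img2", "cat"), (b"img2", "cat")]
)
print(list(found.values()))  # [['cat', 'dog']]
```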

High-quality annotation emphasizes:

- Consistency across datasets: Uniform class definitions and annotation rules.
- Coverage of edge cases: Occlusions, motion blur, rare object instances, and lighting variations.
- Balanced representation: Avoiding over-representation of specific classes or conditions.
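A first check for balanced representation is simply counting how often each class, or each condition tag such as lighting, appears. The sketch below assumes labels are available as a flat list of strings. The 5% `min_share` cutoff is an arbitrary placeholder, not a recommended standard.

```python
from collections import Counter


def class_distribution(labels, min_share=0.05):
    """Return each class's share of the dataset and the classes below min_share."""
    counts = Counter(labels)
    total = sum(counts.values())
    shares = {cls: n / total for cls, n in counts.items()}
    # Classes whose share falls under the (hypothetical) cutoff
    underrepresented = sorted(cls for cls, s in shares.items() if s < min_share)
    return shares, underrepresented


labels = ["car"] * 90 + ["pedestrian"] * 8 + ["cyclist"] * 2
shares, rare = class_distribution(labels)
print(rare)  # ['cyclist']
```

Counts like these only reveal imbalance. Whether to collect more examples, reweight, or resample is a separate decision that depends on the model's objectives.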

A specialized data annotation company brings domain expertise and scalable workflows that ensure datasets reflect real operational environments. This allows models to generalize beyond laboratory conditions and perform reliably in production.

Error Propagation and Hidden Costs of Poor Annotation

Annotation errors rarely remain isolated. Once embedded in training data, they propagate throughout the model lifecycle: mislabeled examples distort feature learning, skew hyperparameter tuning, and, when they also appear in validation sets, make evaluation metrics misleading. Over time, these errors surface as production failures that are costly to diagnose.

Common downstream impacts include:

- Increased false positives or false negatives in safety-critical applications.
- Model drift amplified by weak baseline annotations.
- Re-annotation and retraining costs that exceed initial savings from low-quality labeling.

Organizations that rely on ad hoc labeling or unvetted crowdsourcing often face these challenges. Strategic data annotation outsourcing mitigates such risks by embedding quality assurance into every stage of the annotation pipeline.

Human Expertise Versus Automated Labeling

Automation has improved annotation throughput, but fully automated labeling still struggles with ambiguity, contextual understanding, and edge cases. Human-in-the-loop workflows remain essential for maintaining annotation fidelity.

Experienced annotators can interpret complex scenes, apply nuanced judgment, and adapt guidelines when encountering novel scenarios. When supported by AI-assisted tools, human annotators achieve both speed and precision.

Leading image annotation outsourcing providers combine automation with expert review, ensuring that efficiency gains do not compromise label quality. This hybrid approach delivers scalable datasets while preserving accuracy and consistency.
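One common way to combine automation with human review is to route model-generated pre-labels by confidence. The following is a minimal sketch, assuming each pre-label carries a model confidence score in [0, 1]. The `PreLabel` type, the 0.85 threshold, and the 10% spot-check rate are hypothetical values chosen for illustration. They do not describe any provider's actual pipeline.

```python
import random
from dataclasses import dataclass


@dataclass
class PreLabel:
    image_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route_prelabels(prelabels, threshold=0.85, spot_check_rate=0.1, seed=0):
    """Split model pre-labels into a human-review queue and an auto-accept list.

    Low-confidence labels always go to human review; a random fraction of
    high-confidence labels is also sampled so reviewers can catch
    confidently wrong predictions.
    """
    rng = random.Random(seed)  # seeded so the routing is reproducible
    review, auto_accept = [], []
    for p in prelabels:
        if p.confidence < threshold or rng.random() < spot_check_rate:
            review.append(p)
        else:
            auto_accept.append(p)
    return review, auto_accept
```

The spot check matters because automated labelers can be confidently wrong. A queue that only ever shows reviewers low-confidence items would never surface those errors.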

Quality Control Frameworks That Drive Better Outcomes

High-quality image annotation is not accidental; it is the result of structured quality control frameworks. These typically include:

- Annotation guidelines and taxonomies aligned with model objectives.
- Training and calibration of annotators to reduce subjectivity.
- Multi-pass validation with independent reviewers.
- Statistical sampling and audits to detect systemic errors.
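Statistical sampling makes audits quantitative: reviewers re-check a random sample, and the observed error count is turned into an interval estimate for the whole batch. The sketch below uses simple random sampling and the Wilson score interval. The Wilson interval is one standard choice for estimating proportions, picked here for illustration. The function names are hypothetical.

```python
import math
import random


def audit_sample(item_ids, sample_size, seed=0):
    """Draw a simple random sample of labeled items for independent review."""
    items = list(item_ids)
    rng = random.Random(seed)  # seeded so the audit sample is reproducible
    return rng.sample(items, min(sample_size, len(items)))


def wilson_interval(errors, n, z=1.96):
    """Approximate 95% Wilson score interval for the true label error rate."""
    if n == 0:
        raise ValueError("sample is empty")
    p = errors / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return max(0.0, center - half), min(1.0, center + half)
```

If reviewers find 12 errors in a 400-label sample, the observed error rate is 3%, and `wilson_interval(12, 400)` gives roughly 1.7% to 5.2%. The interval is a more honest basis for accepting or rejecting a batch than the point estimate alone.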

A mature data annotation company treats annotation as an engineering discipline rather than a clerical task. This mindset ensures traceability, accountability, and continuous improvement across large datasets.

Strategic Value for AI-Driven Organizations

For startups, high-quality annotation accelerates time-to-market by reducing iteration cycles and improving early model performance. For enterprises, it ensures scalability, compliance, and consistent results across multiple AI initiatives.

By leveraging professional data annotation outsourcing, organizations gain access to specialized talent, scalable infrastructure, and proven processes without diverting internal resources from core innovation. This strategic alignment allows teams to focus on model design and deployment rather than data remediation.

Conclusion

High-quality image annotation is a foundational investment that directly impacts model accuracy, robustness, and generalization. It determines how effectively a model learns, how reliably it performs in production, and how costly it is to maintain over time.

Organizations that treat annotation as a strategic function, supported by a trusted image annotation company and disciplined image annotation outsourcing practices, position themselves for long-term success in computer vision. At Annotera, we believe that precision in data is precision in outcomes, and that superior annotation is the cornerstone of scalable, trustworthy AI systems.

By prioritizing quality at the data level, businesses can unlock the full potential of their models and confidently deploy AI solutions that perform in the real world, not just in controlled benchmarks.